Merge pull request #1 from github/initial-import

Initial import of OSS OctoDNS

committed by GitHub

81 changed files with 15272 additions and 0 deletions

+11 -0 .git_hooks_pre-commit
+53 -0 .github/CONTRIBUTING.md
+11 -0 .gitignore
+7 -0 .travis.yml
+22 -0 LICENSE
+219 -0 README.md
BIN docs/assets/deploy.png
BIN docs/assets/noop.png
BIN docs/assets/pr.png
+8 -0 octodns/__init__.py
+6 -0 octodns/cmds/__init__.py
+69 -0 octodns/cmds/args.py
+29 -0 octodns/cmds/compare.py
+25 -0 octodns/cmds/dump.py
+99 -0 octodns/cmds/report.py
+37 -0 octodns/cmds/sync.py
+22 -0 octodns/cmds/validate.py
+309 -0 octodns/manager.py
+6 -0 octodns/provider/__init__.py
+116 -0 octodns/provider/base.py
+249 -0 octodns/provider/cloudflare.py
+349 -0 octodns/provider/dnsimple.py
+651 -0 octodns/provider/dyn.py
+361 -0 octodns/provider/powerdns.py
+651 -0 octodns/provider/route53.py
+82 -0 octodns/provider/yaml.py
+549 -0 octodns/record.py
+6 -0 octodns/source/__init__.py
+33 -0 octodns/source/base.py
+208 -0 octodns/source/tinydns.py
+79 -0 octodns/yaml.py
+117 -0 octodns/zone.py
+6 -0 requirements-dev.txt
+17 -0 requirements.txt
+35 -0 script/bootstrap
+30 -0 script/cibuild
+30 -0 script/coverage
+21 -0 script/lint
+15 -0 script/sdist
+28 -0 script/test
+46 -0 setup.py
+4 -0 tests/config/bad-provider-class-module.yaml
+4 -0 tests/config/bad-provider-class-no-module.yaml
+4 -0 tests/config/bad-provider-class.yaml
+1 -0 tests/config/empty.yaml
+3 -0 tests/config/missing-provider-class.yaml
+4 -0 tests/config/missing-provider-config.yaml
+6 -0 tests/config/missing-provider-env.yaml
+3 -0 tests/config/missing-sources.yaml
+13 -0 tests/config/no-dump.yaml
+13 -0 tests/config/simple-validate.yaml
+35 -0 tests/config/simple.yaml
+10 -0 tests/config/subzone.unit.tests.yaml
+108 -0 tests/config/unit.tests.yaml
+28 -0 tests/config/unknown-provider.yaml
+8 -0 tests/config/unordered.yaml
+188 -0 tests/fixtures/cloudflare-dns_records-page-1.json
+116 -0 tests/fixtures/cloudflare-dns_records-page-2.json
+140 -0 tests/fixtures/cloudflare-zones-page-1.json
+140 -0 tests/fixtures/cloudflare-zones-page-2.json
+106 -0 tests/fixtures/dnsimple-invalid-content.json
+314 -0 tests/fixtures/dnsimple-page-1.json
+138 -0 tests/fixtures/dnsimple-page-2.json
+4190 -0 tests/fixtures/dyn-traffic-director-get.json
+235 -0 tests/fixtures/powerdns-full-data.json
+69 -0 tests/helpers.py
+203 -0 tests/test_octodns_manager.py
+170 -0 tests/test_octodns_provider_base.py
+273 -0 tests/test_octodns_provider_cloudflare.py
+202 -0 tests/test_octodns_provider_dnsimple.py
+1155 -0 tests/test_octodns_provider_dyn.py
+290 -0 tests/test_octodns_provider_powerdns.py
+1145 -0 tests/test_octodns_provider_route53.py
+111 -0 tests/test_octodns_provider_yaml.py
+765 -0 tests/test_octodns_record.py
+176 -0 tests/test_octodns_source_tinydns.py
+61 -0 tests/test_octodns_yaml.py
+174 -0 tests/test_octodns_zone.py
BIN tests/zones/.is-needed-for-tests
+48 -0 tests/zones/example.com
+7 -0 tests/zones/other.foo

+ 11

- 0

.git_hooks_pre-commit

| @ -0,0 +1,11 @@ | |||

| #!/bin/sh | |||

| set -e | |||

| HOOKS=`dirname $0` | |||

| GIT=`dirname $HOOKS` | |||

| ROOT=`dirname $GIT` | |||

| source $ROOT/env/bin/activate | |||

| $ROOT/script/lint | |||

| $ROOT/script/test | |||

+ 53

- 0

.github/CONTRIBUTING.md

| @ -0,0 +1,53 @@ | |||

| # Contributing | |||

| Hi there! We're thrilled that you'd like to contribute to OctoDNS. Your help is essential for keeping it great. | |||

| Please note that this project adheres to the [Open Code of Conduct](http://todogroup.org/opencodeofconduct/#GitHub%20OctoDNS/opensource@github.com). By participating in this project you agree to abide by its terms. | |||

| If you have questions, or you'd like to check with us before embarking on a major development effort, please [open an issue](https://github.com/github/octodns/issues/new). | |||

| ## How to contribute | |||

| This project uses the [GitHub Flow](https://guides.github.com/introduction/flow/). That means that the `master` branch is stable and new development is done in feature branches. Feature branches are merged into the `master` branch via a Pull Request. | |||

| 0. Fork and clone the repository | |||

| 0. Configure and install the dependencies: `script/bootstrap` | |||

| 0. Make sure the tests pass on your machine: `script/test` | |||

| 0. Create a new branch: `git checkout -b my-branch-name` | |||

| 0. Make your change, add tests, and make sure the tests still pass | |||

| 0. Make sure that `./script/lint` passes without any warnings | |||

| 0. Make sure that coverage is at :100:%: run `script/coverage` and open `htmlcov/index.html` | |||

| * You can open PRs for :eyes: & discussion prior to this | |||

| 0. Push to your fork and submit a pull request | |||

| We will handle updating the version, tagging the release, and releasing the package. Please don't bump the version or otherwise attempt to take on these administrative internal tasks as part of your pull request. | |||

| Here are a few things you can do that will increase the likelihood of your pull request being accepted: | |||

| - Follow [pep8](https://www.python.org/dev/peps/pep-0008/) | |||

| - Write thorough tests. No PRs will be merged without :100:% code coverage. More than that, tests should be very thorough and cover as many (edge) cases as possible. We're working with DNS here and bugs can have a major impact, so we need to do as much as reasonably possible to ensure quality. While :100:% doesn't even begin to mean there are no bugs, getting there often requires close inspection & a relatively complete understanding of the code. More often than not the endeavor will uncover at least minor problems. | |||

| - Bug fixes require specific tests covering the addressed behavior. | |||

| - Write or update documentation. If you have added a feature or changed an existing one, please make appropriate changes to the docs. Doc-only PRs are always welcome. | |||

| - Keep your change as focused as possible. If there are multiple changes you would like to make that are not dependent upon each other, consider submitting them as separate pull requests. | |||

| - We target Python 2.7, but have taken steps to make Python 3 support as easy as possible when someone decides it's needed. PR welcome. | |||

| - Write a [good commit message](http://tbaggery.com/2008/04/19/a-note-about-git-commit-messages.html). | |||

| ## License note | |||

| We can only accept contributions that are compatible with the MIT license. | |||

| It's OK to depend on packages licensed under either Apache 2.0 or MIT, but we cannot add dependencies on any packages that are licensed under GPL. | |||

| Any contributions you make must be under the MIT license. | |||

| ## Resources | |||

| - [Contributing to Open Source on GitHub](https://guides.github.com/activities/contributing-to-open-source/) | |||

| - [Using Pull Requests](https://help.github.com/articles/using-pull-requests/) | |||

| - [GitHub Help](https://help.github.com) | |||

+ 11

- 0

.gitignore

| @ -0,0 +1,11 @@ | |||

| *.pyc | |||

| .coverage | |||

| .env | |||

| coverage.xml | |||

| dist/ | |||

| env/ | |||

| htmlcov/ | |||

| nosetests.xml | |||

| octodns.egg-info/ | |||

| output/ | |||

| tmp/ | |||

+ 7

- 0

.travis.yml

| @ -0,0 +1,7 @@ | |||

| language: python | |||

| python: | |||

|   - 2.7 | |||

| script: ./script/cibuild | |||

| notifications: | |||

|   email: | |||

|     - ross@github.com | |||

+ 22

- 0

LICENSE

| @ -0,0 +1,22 @@ | |||

| Copyright (c) 2017 GitHub, Inc. | |||

| Permission is hereby granted, free of charge, to any person | |||

| obtaining a copy of this software and associated documentation | |||

| files (the "Software"), to deal in the Software without | |||

| restriction, including without limitation the rights to use, | |||

| copy, modify, merge, publish, distribute, sublicense, and/or sell | |||

| copies of the Software, and to permit persons to whom the | |||

| Software is furnished to do so, subject to the following | |||

| conditions: | |||

| The above copyright notice and this permission notice shall be | |||

| included in all copies or substantial portions of the Software. | |||

| THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, | |||

| EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES | |||

| OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND | |||

| NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT | |||

| HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, | |||

| WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING | |||

| FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR | |||

| OTHER DEALINGS IN THE SOFTWARE. | |||

+ 219

- 0

README.md

| @ -0,0 +1,219 @@ | |||

| # OctoDNS | |||

| ## DNS as code - Tools for managing DNS across multiple providers | |||

| In the vein of [infrastructure as | |||

| code](https://en.wikipedia.org/wiki/Infrastructure_as_Code) OctoDNS provides a set of tools & patterns that make it easy to manage your DNS records across multiple providers. The resulting config can live in a repository and be [deployed](https://github.com/blog/1241-deploying-at-github) just like the rest of your code, maintaining a clear history and using your existing review & workflow. | |||

| The architecture is pluggable and the tooling is flexible to make it applicable to a wide variety of use-cases. Effort has been made to make adding new providers as easy as possible. In the simple case, that involves writing a single `class` and a couple hundred lines of code, most of which is translating between the provider's schema and OctoDNS's. More on some of the ways we use it and how to go about extending it below and in the [/docs directory](/docs). | |||

| It is similar to [Netflix/denominator](https://github.com/Netflix/denominator). | |||

| ## Getting started | |||

| ### Workspace | |||

| Running through the following commands will install the latest release of OctoDNS and set up a place for your config files to live. | |||

| ``` | |||

| $ mkdir dns | |||

| $ cd dns | |||

| $ virtualenv env | |||

| ... | |||

| $ source env/bin/activate | |||

| $ pip install octodns | |||

| $ mkdir config | |||

| ``` | |||

| ### Config | |||

| We start by creating a config file to tell OctoDNS about our providers and the zone(s) we want it to manage. Below we're setting up a `YamlProvider` to source records from our config files and both a `Route53Provider` and `DynProvider` to serve as the targets for those records. You can have any number of zones set up and any number of sources of data and targets for records for each. You can also have multiple config files that make use of separate accounts and each manage a distinct set of zones. A good example of this might be `./config/staging.yaml` & `./config/production.yaml`. We'll focus on a `config/production.yaml`. | |||

| ```yaml | |||

| --- | |||

| providers: | |||

|   config: | |||

|     class: octodns.provider.yaml.YamlProvider | |||

|     directory: ./config | |||

|   dyn: | |||

|     class: octodns.provider.dyn.DynProvider | |||

|     customer: 1234 | |||

|     username: 'username' | |||

|     password: env/DYN_PASSWORD | |||

|   route53: | |||

|     class: octodns.provider.route53.Route53Provider | |||

|     access_key_id: env/AWS_ACCESS_KEY_ID | |||

|     secret_access_key: env/AWS_SECRET_ACCESS_KEY | |||

| zones: | |||

|   example.com.: | |||

|     sources: | |||

|       - config | |||

|     targets: | |||

|       - dyn | |||

|       - route53 | |||

| ``` | |||

| `class` is a special key that tells OctoDNS what Python class should be loaded. Any other keys will be passed as configuration values to that provider. In general any sensitive or frequently rotated values should come from environment variables. When OctoDNS sees a value that starts with `env/` it will look for that value in the process's environment and pass the result along. | |||
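
As a rough illustration of the `env/` convention described above, the following is a minimal sketch and not OctoDNS's actual implementation. The function name `resolve_env_values` and the choice to raise `KeyError` on a missing variable are assumptions made for this example, and it is written for Python 3 rather than the Python 2.7 the project targets.

```python
import os


def resolve_env_values(provider_config, environ=None):
    """Return a copy of provider_config with 'env/NAME' values looked up.

    Hypothetical helper for illustration only; OctoDNS's own handling
    lives in its Manager and may differ in details.
    """
    environ = os.environ if environ is None else environ
    resolved = {}
    for key, value in provider_config.items():
        if isinstance(value, str) and value.startswith('env/'):
            # Strip the 'env/' prefix to get the variable name
            name = value[len('env/'):]
            if name not in environ:
                raise KeyError('missing environment variable: ' + name)
            resolved[key] = environ[name]
        else:
            # Non-string and plain string values pass through untouched
            resolved[key] = value
    return resolved


config = {
    'customer': 1234,
    'username': 'username',
    'password': 'env/DYN_PASSWORD',
}
print(resolve_env_values(config, environ={'DYN_PASSWORD': 's3cret'}))
```

Passing `environ` explicitly keeps the sketch easy to test; in real use it would default to the process environment.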

| Now that we have something to tell OctoDNS about our providers & zones we need to tell it about our records. We'll keep it simple for now and just create a single `A` record at the top-level of the domain. | |||

| `config/example.com.yaml` | |||

| ```yaml | |||

| --- | |||

| '': | |||

|   ttl: 60 | |||

|   type: A | |||

|   values: | |||

|     - 1.2.3.4 | |||

|     - 1.2.3.5 | |||

| ``` | |||

| ### Noop | |||

| We're ready to do a dry-run with our new setup to see what changes it would make. Since we're pretending here we'll act like there are no existing records for `example.com.` in our accounts on either provider. | |||

| ``` | |||

| $ octodns-sync --config-file=./config/production.yaml | |||

| ... | |||

| ******************************************************************************** | |||

| * example.com. | |||

| ******************************************************************************** | |||

| * route53 (Route53Provider) | |||

| * Create <ARecord A 60, example.com., [u'1.2.3.4', '1.2.3.5']> | |||

| * Summary: Creates=1, Updates=0, Deletes=0, Existing Records=0 | |||

| * dyn (DynProvider) | |||

| * Create <ARecord A 60, example.com., [u'1.2.3.4', '1.2.3.5']> | |||

| * Summary: Creates=1, Updates=0, Deletes=0, Existing Records=0 | |||

| ******************************************************************************** | |||

| ... | |||

| ``` | |||

| There will be other logging information presented on the screen, but successful runs of sync will always end with a summary like the above for any providers & zones with changes. If there are no changes a message saying so will be printed instead. Above we're creating a new zone in both providers so they show the same change, but that doesn't always have to be the case. If one of them had started out in a different state you would see the changes OctoDNS intends to make to sync them up. | |||

| ### Making changes | |||

| **WARNING**: OctoDNS assumes ownership of any domain you point it to. When you tell it to act it will do whatever is necessary to try and match up states, including deleting any unexpected records. Be careful when playing around with OctoDNS. It's best to experiment with a fake zone or one without any data that matters until you're comfortable with the system. | |||

| Now it's time to tell OctoDNS to make things happen. We'll invoke it again with the same options and add a `--doit` on the end to tell it this time we actually want it to try and make the specified changes. | |||

| ``` | |||

| $ octodns-sync --config-file=./config/production.yaml --doit | |||

| ... | |||

| ``` | |||

| The output here would be the same as before with a few more log lines at the end as it makes the actual changes. After that, the config in Route53 and Dyn should match what's in the yaml file. | |||

| ### Workflow | |||

| In the above case we manually ran OctoDNS from the command line. That works and it's better than heading into the provider GUIs and making changes by clicking around, but OctoDNS is designed to be run as part of a deploy process. The implementation details are well beyond the scope of this README, but here is an example of the workflow we use at GitHub. It follows the way [GitHub itself is branch deployed](https://githubengineering.com/deploying-branches-to-github-com/). | |||

| The first step is to create a PR with your changes. | |||

|  | |||

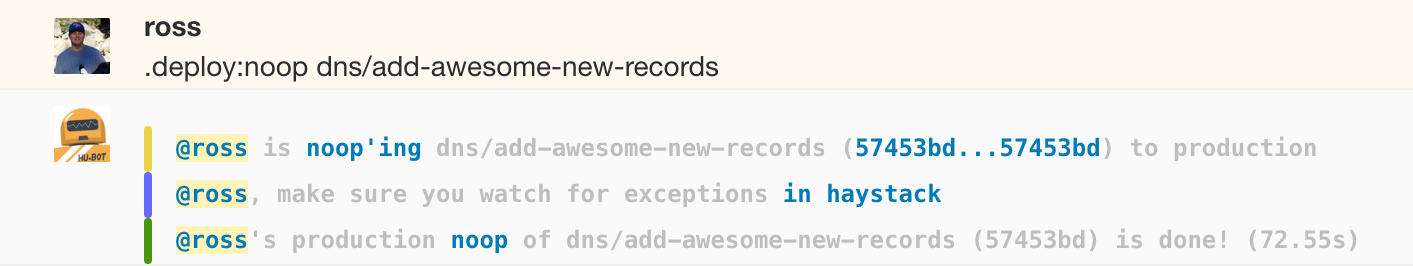

| Assuming the code tests and config validation statuses are green the next step is to do a noop deploy and verify that the changes OctoDNS plans to make are the ones you expect. | |||

|  | |||

| After that comes a set of reviews. One is from a teammate who should have full context on what you're trying to accomplish and visibility into the changes you're making to do it. The other is from a member of the team here at GitHub that owns DNS, mostly as a sanity check and to make sure that best practices are being followed. As much of that as possible is baked into `octodns-validate`. | |||

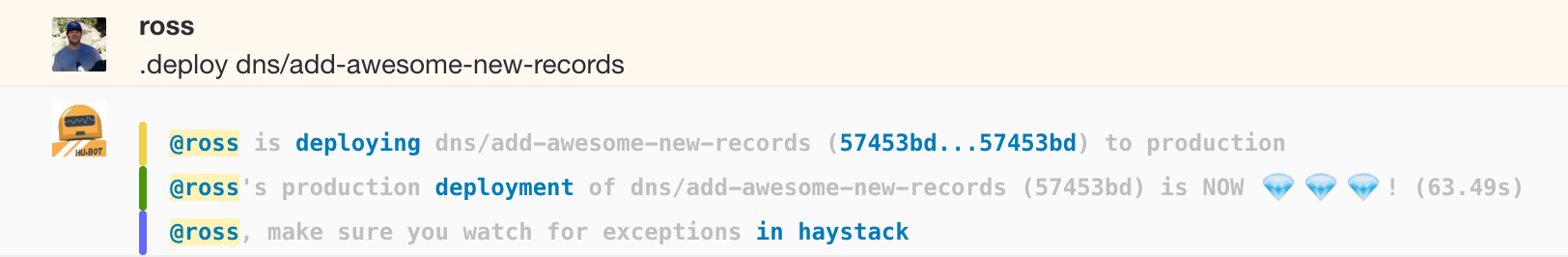

| After the reviews it's time to branch deploy the change. | |||

|  | |||

| If that goes smoothly, you again see the expected changes, and you've verified them with `dig` and/or `octodns-report`, you're good to hit the merge button. If there are problems you can quickly do a `.deploy dns/master` to go back to the previous state. | |||

| ### Bootstrapping config files | |||

| Very few situations will involve starting with a blank slate which is why there's tooling built in to pull existing data out of providers into a matching config file. | |||

| ``` | |||

| $ octodns-dump --config-file=config/production.yaml --output-dir=tmp/ example.com. route53 | |||

| 2017-03-15T13:33:34 INFO Manager __init__: config_file=config/production.yaml | |||

| 2017-03-15T13:33:34 INFO Manager dump: zone=example.com., sources=('route53',) | |||

| 2017-03-15T13:33:36 INFO Route53Provider[route53] populate: found 64 records | |||

| 2017-03-15T13:33:36 INFO YamlProvider[dump] plan: desired=example.com. | |||

| 2017-03-15T13:33:36 INFO YamlProvider[dump] plan: Creates=64, Updates=0, Deletes=0, Existing Records=0 | |||

| 2017-03-15T13:33:36 INFO YamlProvider[dump] apply: making changes | |||

| ``` | |||

| The above command pulled the existing data out of Route53 and placed the results into `tmp/example.com.yaml`. That file can be inspected and moved into `config/` to become the new source. If things are working as designed a subsequent noop sync should show zero changes. | |||

| ## Custom Sources and Providers | |||

| You can check out the [source](/octodns/source/) and [provider](/octodns/provider/) directory to see what's currently supported. Sources act as a source of record information. TinyDnsProvider is currently the only OSS source, though we have several others internally that are specific to our environment. These include something to pull host data from [gPanel](https://githubengineering.com/githubs-metal-cloud/) and a similar provider that sources information about our network gear to create both `A` & `PTR` records for their interfaces. Things that might make good OSS sources might include an `ElbSource` that pulls information about [AWS Elastic Load Balancers](https://aws.amazon.com/elasticloadbalancing/) and dynamically creates `CNAME`s for them, or `Ec2Source` that pulls instance information so that records can be created for hosts similar to how our `GPanelProvider` works. An `AxfrSource` could be really interesting as well. Another case where a source may make sense is if you'd like to export data from a legacy service that you have no plans to push changes back into. | |||

| Most of the things included in OctoDNS are providers, the obvious difference being that they can serve as both sources and targets of data. We'd really like to see this list grow over time so if you use an unsupported provider then PRs are welcome. The existing providers should serve as reasonable examples. Those that have no GeoDNS support are relatively straightforward. Unfortunately most of the APIs involved to do GeoDNS style traffic management are complex and somewhat inconsistent so adding support for that function would be nice, but is optional and best done in a separate pass. | |||

| The `class` key in the providers config section can be used to point to arbitrary classes in the Python path so internal or 3rd party providers can easily be included with no coordination beyond getting them into PYTHONPATH, most likely installed into the virtualenv with OctoDNS. | |||
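
To make the idea of pointing `class` at an arbitrary importable class more concrete, here is a minimal sketch of how a dotted path might be turned into an instance. It is an assumption-laden illustration, not the code in `octodns/manager.py`: `load_class` and `build_from_config` are hypothetical names, the remaining keys are simply passed as keyword arguments, and `datetime.timedelta` stands in for a provider class so the example runs with only the standard library.

```python
from importlib import import_module


def load_class(dotted_path):
    """Import 'package.module.ClassName' and return the class object."""
    module_name, _, class_name = dotted_path.rpartition('.')
    if not module_name:
        raise ValueError('expected "module.ClassName", got: ' + dotted_path)
    module = import_module(module_name)
    return getattr(module, class_name)


def build_from_config(section):
    """Instantiate the class named by 'class' with the other keys as kwargs.

    Hypothetical helper: a real provider would likely receive additional
    arguments (such as its configured name) that are omitted here.
    """
    kwargs = dict(section)
    cls = load_class(kwargs.pop('class'))
    return cls(**kwargs)


# Stand-in for a provider section; any importable class works the same way
section = {'class': 'datetime.timedelta', 'seconds': 60}
print(build_from_config(section))
```

Because the lookup goes through the normal import machinery, anything installed into the same virtualenv is reachable without registering it anywhere.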

| ## Other Uses | |||

| ### Syncing between providers | |||

| While the primary use-case is to sync a set of yaml config files up to one or more DNS providers, OctoDNS has been built in such a way that you can easily source and target things arbitrarily. As a quick example the config below would sync `githubtest.net.` from Route53 to Dyn. | |||

| ```yaml | |||

| --- | |||

| providers: | |||

|   route53: | |||

|     class: octodns.provider.route53.Route53Provider | |||

|     access_key_id: env/AWS_ACCESS_KEY_ID | |||

|     secret_access_key: env/AWS_SECRET_ACCESS_KEY | |||

|   dyn: | |||

|     class: octodns.provider.dyn.DynProvider | |||

|     customer: env/DYN_CUSTOMER | |||

|     username: env/DYN_USERNAME | |||

|     password: env/DYN_PASSWORD | |||

| zones: | |||

|   githubtest.net.: | |||

|     sources: | |||

|       - route53 | |||

|     targets: | |||

|       - dyn | |||

| ``` | |||

| ### Dynamic sources | |||

| Internally we use custom sources to create records based on dynamic data that changes frequently without direct human intervention. An example of that might look something like the following. For hosts this mechanism is janitorial, run periodically, making sure the correct records exist as long as the host is alive and ensuring they are removed after the host is destroyed. The host provisioning and destruction processes do the actual work to create and destroy the records. | |||

| ```yaml | |||

| --- | |||

| providers: | |||

|   gpanel-site: | |||

|     class: github.octodns.source.gpanel.GPanelProvider | |||

|     host: 'gpanel.site.github.foo' | |||

|     token: env/GPANEL_SITE_TOKEN | |||

|   powerdns-site: | |||

|     class: octodns.provider.powerdns.PowerDnsProvider | |||

|     host: 'internal-dns.site.github.foo' | |||

|     api_key: env/POWERDNS_SITE_API_KEY | |||

| zones: | |||

|   hosts.site.github.foo.: | |||

|     sources: | |||

|       - gpanel-site | |||

|     targets: | |||

|       - powerdns-site | |||

| ``` | |||

| ## Contributing | |||

| Please see our [contributing document](/.github/CONTRIBUTING.md) if you would like to participate! | |||

| ## Getting help | |||

| If you have a problem or suggestion, please [open an issue](https://github.com/github/octodns/issues/new) in this repository, and we will do our best to help. Please note that this project adheres to the [Open Code of Conduct](http://todogroup.org/opencodeofconduct/#GitHub%20OctoDNS/opensource@github.com). | |||

| ## License | |||

| OctoDNS is licensed under the [MIT license](LICENSE). | |||

| ## Authors | |||

| OctoDNS was designed and authored by [Ross McFarland](https://github.com/ross) and [Joe Williams](https://github.com/joewilliams). It is now maintained, reviewed, and tested by Ross, Joe, and the rest of the Site Reliability Engineering team at GitHub. | |||

BIN

docs/assets/deploy.png

BIN

docs/assets/noop.png

BIN

docs/assets/pr.png

+ 8

- 0

octodns/__init__.py

| @ -0,0 +1,8 @@ | |||

| ''' | |||

| OctoDNS: DNS as code - Tools for managing DNS across multiple providers | |||

| ''' | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| __VERSION__ = '0.8.0' | |||

+ 6

- 0

octodns/cmds/__init__.py

| @ -0,0 +1,6 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

+ 69

- 0

octodns/cmds/args.py

| @ -0,0 +1,69 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from argparse import ArgumentParser as _Base | |||

| from logging import DEBUG, INFO, WARN, Formatter, StreamHandler, \ | |||

| getLogger | |||

| from logging.handlers import SysLogHandler | |||

| from sys import stderr, stdout | |||

| class ArgumentParser(_Base): | |||

|     ''' | |||

|     Manages argument parsing and adds some defaults and takes action on them. | |||

|     Also manages logging setup. | |||

|     ''' | |||

|     def __init__(self, *args, **kwargs): | |||

|         super(ArgumentParser, self).__init__(*args, **kwargs) | |||

|     def parse_args(self, default_log_level=INFO): | |||

|         self.add_argument('--log-stream-stdout', action='store_true', | |||

|                           default=False, | |||

|                           help='Log to stdout instead of stderr') | |||

|         _help = 'Send logging data to syslog in addition to stderr' | |||

|         self.add_argument('--log-syslog', action='store_true', default=False, | |||

|                           help=_help) | |||

|         self.add_argument('--syslog-device', default='/dev/log', | |||

|                           help='Syslog device') | |||

|         self.add_argument('--syslog-facility', default='local0', | |||

|                           help='Syslog facility') | |||

|         _help = 'Increase verbosity to get details and help track down issues' | |||

|         self.add_argument('--debug', action='store_true', default=False, | |||

|                           help=_help) | |||

|         args = super(ArgumentParser, self).parse_args() | |||

|         self._setup_logging(args, default_log_level) | |||

|         return args | |||

|     def _setup_logging(self, args, default_log_level): | |||

|         # TODO: if/when things are multi-threaded add [%(thread)d] in to the | |||

|         # format | |||

|         fmt = '%(asctime)s %(levelname)-5s %(name)s %(message)s' | |||

|         formatter = Formatter(fmt=fmt, datefmt='%Y-%m-%dT%H:%M:%S ') | |||

|         stream = stdout if args.log_stream_stdout else stderr | |||

|         handler = StreamHandler(stream=stream) | |||

|         handler.setFormatter(formatter) | |||

|         logger = getLogger() | |||

|         logger.addHandler(handler) | |||

|         if args.log_syslog: | |||

|             fmt = 'octodns[%(process)-5s:%(thread)d]: %(name)s ' \ | |||

|                 '%(levelname)-5s %(message)s' | |||

|             handler = SysLogHandler(address=args.syslog_device, | |||

|                                     facility=args.syslog_facility) | |||

|             handler.setFormatter(Formatter(fmt=fmt)) | |||

|             logger.addHandler(handler) | |||

|         logger.level = DEBUG if args.debug else default_log_level | |||

|         # boto is noisy, set it to warn | |||

|         getLogger('botocore').level = WARN | |||

|         # DynectSession is noisy too | |||

|         getLogger('DynectSession').level = WARN | |||
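
The stream format string in `_setup_logging` above is what produces log lines shaped like the ones shown in the README. The following standalone sketch reuses the same `fmt` and `datefmt` values with the standard library only; `format_example` is a hypothetical helper for this illustration, and the record is built by hand rather than emitted through a logger.

```python
import logging

# Same format strings as the stream handler in args.py
FMT = '%(asctime)s %(levelname)-5s %(name)s %(message)s'
DATEFMT = '%Y-%m-%dT%H:%M:%S '


def format_example(name, message, level=logging.INFO):
    """Format one hand-built log record the way the stream handler would."""
    formatter = logging.Formatter(fmt=FMT, datefmt=DATEFMT)
    record = logging.LogRecord(name=name, level=level, pathname='example.py',
                               lineno=0, msg=message, args=(), exc_info=None)
    return formatter.format(record)


print(format_example('Manager', '__init__: config_file=config/production.yaml'))
```

The `-5s` padding on the level name keeps `INFO`, `WARN`, and `DEBUG` lines aligned in the output.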

+ 29

- 0

octodns/cmds/compare.py

| @ -0,0 +1,29 @@ | |||

| #!/usr/bin/env python | |||

| ''' | |||

| Octo-DNS Comparator | |||

| ''' | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from pprint import pprint | |||

| from octodns.cmds.args import ArgumentParser | |||

| from octodns.manager import Manager | |||

| parser = ArgumentParser(description=__doc__.split('\n')[1]) | |||

| parser.add_argument('--config-file', required=True, | |||

| help='The Manager configuration file to use') | |||

| parser.add_argument('--a', nargs='+', required=True, | |||

| help='First source(s) to pull data from') | |||

| parser.add_argument('--b', nargs='+', required=True, | |||

| help='Second source(s) to pull data from') | |||

| parser.add_argument('--zone', default=None, required=True, | |||

| help='Zone to compare') | |||

| args = parser.parse_args() | |||

| manager = Manager(args.config_file) | |||

| changes = manager.compare(args.a, args.b, args.zone) | |||

| pprint(changes) | |||

+ 25

- 0

octodns/cmds/dump.py

| @ -0,0 +1,25 @@ | |||

| #!/usr/bin/env python | |||

| ''' | |||

| Octo-DNS Dumper | |||

| ''' | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from octodns.cmds.args import ArgumentParser | |||

| from octodns.manager import Manager | |||

| parser = ArgumentParser(description=__doc__.split('\n')[1]) | |||

| parser.add_argument('--config-file', required=True, | |||

| help='The Manager configuration file to use') | |||

| parser.add_argument('--output-dir', required=True, | |||

| help='The directory into which the results will be ' | |||

| 'written (Note: will overwrite existing files)') | |||

| parser.add_argument('zone', help='Zone to dump') | |||

| parser.add_argument('source', nargs='+', help='Source(s) to pull data from') | |||

| args = parser.parse_args() | |||

| manager = Manager(args.config_file) | |||

| manager.dump(args.zone, args.output_dir, *args.source) | |||

+ 99

- 0

octodns/cmds/report.py

| @ -0,0 +1,99 @@ | |||

| #!/usr/bin/env python | |||

| ''' | |||

| Octo-DNS Reporter | |||

| ''' | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from concurrent.futures import ThreadPoolExecutor | |||

| from dns.exception import Timeout | |||

| from dns.resolver import NXDOMAIN, NoAnswer, NoNameservers, Resolver, query | |||

| from logging import getLogger | |||

| from sys import stdout | |||

| import re | |||

| from octodns.cmds.args import ArgumentParser | |||

| from octodns.manager import Manager | |||

| from octodns.zone import Zone | |||

| class AsyncResolver(Resolver): | |||

|     def __init__(self, num_workers, *args, **kwargs): | |||

|         super(AsyncResolver, self).__init__(*args, **kwargs) | |||

|         self.executor = ThreadPoolExecutor(max_workers=num_workers) | |||

|     def query(self, *args, **kwargs): | |||

|         return self.executor.submit(super(AsyncResolver, self).query, *args, | |||

|                                     **kwargs) | |||

| parser = ArgumentParser(description=__doc__.split('\n')[1]) | |||

| parser.add_argument('--config-file', required=True, | |||

| help='The Manager configuration file to use') | |||

| parser.add_argument('--zone', required=True, help='Zone to report on') | |||

| parser.add_argument('--source', required=True, default=[], action='append', | |||

| help='Source(s) to pull data from') | |||

| parser.add_argument('--num-workers', default=4, | |||

| help='Number of background workers') | |||

| parser.add_argument('--timeout', default=1, | |||

| help='Number of seconds to wait for an answer') | |||

| parser.add_argument('server', nargs='+', help='Servers to query') | |||

| args = parser.parse_args() | |||

| manager = Manager(args.config_file) | |||

| log = getLogger('report') | |||

| try: | |||

|     sources = [manager.providers[source] for source in args.source] | |||

| except KeyError as e: | |||

|     raise Exception('Unknown source: {}'.format(e.args[0])) | |||

| zone = Zone(args.zone, manager.configured_sub_zones(args.zone)) | |||

| for source in sources: | |||

|     source.populate(zone) | |||

| print('name,type,ttl,{},consistent'.format(','.join(args.server))) | |||

| resolvers = [] | |||

| ip_addr_re = re.compile(r'^[\d\.]+$') | |||

| for server in args.server: | |||

|     resolver = AsyncResolver(configure=False, | |||

|                              num_workers=int(args.num_workers)) | |||

|     if not ip_addr_re.match(server): | |||

|         server = str(query(server, 'A')[0]) | |||

|     log.info('server=%s', server) | |||

|     resolver.nameservers = [server] | |||

|     resolver.lifetime = int(args.timeout) | |||

|     resolvers.append(resolver) | |||

| queries = {} | |||

| for record in sorted(zone.records): | |||

|     queries[record] = [r.query(record.fqdn, record._type) | |||

|                        for r in resolvers] | |||

| for record, futures in sorted(queries.items(), key=lambda d: d[0]): | |||

|     stdout.write(record.fqdn) | |||

|     stdout.write(',') | |||

|     stdout.write(record._type) | |||

|     stdout.write(',') | |||

|     stdout.write(str(record.ttl)) | |||

|     compare = {} | |||

|     for future in futures: | |||

|         stdout.write(',') | |||

|         try: | |||

|             answers = [str(r) for r in future.result()] | |||

|         except (NoAnswer, NoNameservers): | |||

|             answers = ['*no answer*'] | |||

|         except NXDOMAIN: | |||

|             answers = ['*does not exist*'] | |||

|         except Timeout: | |||

|             answers = ['*timeout*'] | |||

|         stdout.write(' '.join(answers)) | |||

|         # sorting to ignore order | |||

|         answers = '*:*'.join(sorted(answers)).lower() | |||

|         compare[answers] = True | |||

|     stdout.write(',True\n' if len(compare) == 1 else ',False\n') | |||
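
The `consistent` column written by the loop above comes down to normalizing each server's answers (sorted, joined, lowercased) and checking that only one distinct value remains. A minimal standalone sketch of that comparison, with the DNS queries replaced by plain lists, might look like this; `answers_consistent` is a name invented for the example.

```python
def answers_consistent(answers_per_server):
    """Return True when every server gave the same set of answers.

    Each element is the list of answer strings from one server. Order
    and letter case are ignored, mirroring the normalization in
    report.py.
    """
    seen = set()
    for answers in answers_per_server:
        # Sorting makes the comparison independent of answer order
        seen.add('*:*'.join(sorted(answers)).lower())
    return len(seen) == 1


print(answers_consistent([['1.2.3.4', '1.2.3.5'], ['1.2.3.5', '1.2.3.4']]))
print(answers_consistent([['1.2.3.4'], ['*timeout*']]))
```

As in the original loop, placeholder answers such as `*timeout*` take part in the comparison, so a server that fails to respond marks the record as inconsistent.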

+ 37

- 0

octodns/cmds/sync.py

| @ -0,0 +1,37 @@ | |||

| #!/usr/bin/env python | |||

| ''' | |||

| Octo-DNS Multiplexer | |||

| ''' | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from octodns.cmds.args import ArgumentParser | |||

| from octodns.manager import Manager | |||

| parser = ArgumentParser(description=__doc__.split('\n')[1]) | |||

| parser.add_argument('--config-file', required=True, | |||

| help='The Manager configuration file to use') | |||

| parser.add_argument('--doit', action='store_true', default=False, | |||

| help='Whether to take action or just show what would ' | |||

| 'change') | |||

| parser.add_argument('--force', action='store_true', default=False, | |||

| help='Acknowledge that significant changes are being made ' | |||

| 'and do them') | |||

| parser.add_argument('zone', nargs='*', default=[], | |||

| help='Limit sync to the specified zone(s)') | |||

| # --sources isn't an option here b/c filtering sources out would be super | |||

| # dangerous since you could easily end up with an empty zone and delete | |||

| # everything, or even just part of things when there are multiple sources | |||

| parser.add_argument('--target', default=[], action='append', | |||

| help='Limit sync to the specified target(s)') | |||

| args = parser.parse_args() | |||

| manager = Manager(args.config_file) | |||

| manager.sync(eligible_zones=args.zone, eligible_targets=args.target, | |||

| dry_run=not args.doit, force=args.force) | |||
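The sync command defaults to a dry run: nothing is applied unless `--doit` is passed, and `--force` only skips the safety check. The sketch below shows that flag-to-keyword mapping with the standard library `argparse` rather than the project's own `ArgumentParser` wrapper; `build_parser` and `sync_kwargs` are hypothetical helper names used only for illustration.

```python
import argparse


def build_parser():
    # Plain argparse stand-in for octodns.cmds.args.ArgumentParser
    parser = argparse.ArgumentParser(description='sync flag sketch')
    parser.add_argument('--doit', action='store_true', default=False)
    parser.add_argument('--force', action='store_true', default=False)
    parser.add_argument('--target', default=[], action='append')
    parser.add_argument('zone', nargs='*', default=[])
    return parser


def sync_kwargs(argv):
    """Translate command-line flags into the keyword arguments for sync."""
    args = build_parser().parse_args(argv)
    return {
        'eligible_zones': args.zone,
        'eligible_targets': args.target,
        # Dry run is the default; --doit is what turns it off
        'dry_run': not args.doit,
        'force': args.force,
    }


if __name__ == '__main__':
    print(sync_kwargs(['--doit', '--target', 'route53', 'example.com.']))
```

Making the destructive path opt-in means a forgotten flag produces a plan printout rather than a change to live DNS.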

+ 22

- 0

octodns/cmds/validate.py

| @ -0,0 +1,22 @@ | |||

| #!/usr/bin/env python | |||

| ''' | |||

| Octo-DNS Validator | |||

| ''' | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from logging import WARN | |||

| from octodns.cmds.args import ArgumentParser | |||

| from octodns.manager import Manager | |||

| parser = ArgumentParser(description=__doc__.split('\n')[1]) | |||

| parser.add_argument('--config-file', default='./config/production.yaml', | |||

| help='The Manager configuration file to use') | |||

| args = parser.parse_args(WARN) | |||

| manager = Manager(args.config_file) | |||

| manager.validate_configs() | |||

+ 309

- 0

octodns/manager.py

| @ -0,0 +1,309 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from StringIO import StringIO | |||

| from importlib import import_module | |||

| from os import environ | |||

| import logging | |||

| from .provider.base import BaseProvider | |||

| from .provider.yaml import YamlProvider | |||

| from .yaml import safe_load | |||

| from .zone import Zone | |||

| class _AggregateTarget(object): | |||

| id = 'aggregate' | |||

| def __init__(self, targets): | |||

| self.targets = targets | |||

| def supports(self, record): | |||

| for target in self.targets: | |||

| if not target.supports(record): | |||

| return False | |||

| return True | |||

| @property | |||

| def SUPPORTS_GEO(self): | |||

| for target in self.targets: | |||

| if not target.SUPPORTS_GEO: | |||

| return False | |||

| return True | |||

| class Manager(object): | |||

| log = logging.getLogger('Manager') | |||

| def __init__(self, config_file): | |||

| self.log.info('__init__: config_file=%s', config_file) | |||

| # Read our config file | |||

| with open(config_file, 'r') as fh: | |||

| self.config = safe_load(fh, enforce_order=False) | |||

| self.log.debug('__init__: configuring providers') | |||

| self.providers = {} | |||

| for provider_name, provider_config in self.config['providers'].items(): | |||

| # Get our class and remove it from the provider_config | |||

| try: | |||

| _class = provider_config.pop('class') | |||

| except KeyError: | |||

| raise Exception('Provider {} is missing class' | |||

| .format(provider_name)) | |||

| _class = self._get_provider_class(_class) | |||

| # Build up the arguments we need to pass to the provider | |||

| kwargs = {} | |||

| for k, v in provider_config.items(): | |||

| try: | |||

| if v.startswith('env/'): | |||

| try: | |||

| env_var = v[4:] | |||

| v = environ[env_var] | |||

| except KeyError: | |||

| raise Exception('Incorrect provider config, ' | |||

| 'missing env var {}' | |||

| .format(env_var)) | |||

| except AttributeError: | |||

| pass | |||

| kwargs[k] = v | |||

| try: | |||

| self.providers[provider_name] = _class(provider_name, **kwargs) | |||

| except TypeError: | |||

| raise Exception('Incorrect provider config for {}' | |||

| .format(provider_name)) | |||

| zone_tree = {} | |||

| # sort by reversed strings so that parent zones always come first | |||

| for name in sorted(self.config['zones'].keys(), key=lambda s: s[::-1]): | |||

| # ignore trailing dots, and reverse | |||

| pieces = name[:-1].split('.')[::-1] | |||

| # where starts out at the top | |||

| where = zone_tree | |||

| # for all the pieces | |||

| for piece in pieces: | |||

| try: | |||

| where = where[piece] | |||

| # our current piece already exists, just point where at | |||

| # its value | |||

| except KeyError: | |||

| # our current piece doesn't exist, create it | |||

| where[piece] = {} | |||

| # and then point where at its newly created value | |||

| where = where[piece] | |||

| self.zone_tree = zone_tree | |||

| def _get_provider_class(self, _class): | |||

| try: | |||

| module_name, class_name = _class.rsplit('.', 1) | |||

| module = import_module(module_name) | |||

| except (ImportError, ValueError): | |||

| self.log.error('_get_provider_class: Unable to import module %s', | |||

| _class) | |||

| raise Exception('Unknown provider class: {}'.format(_class)) | |||

| try: | |||

| return getattr(module, class_name) | |||

| except AttributeError: | |||

| self.log.error('_get_provider_class: Unable to get class %s from ' | |||

| 'module %s', class_name, module) | |||

| raise Exception('Unknown provider class: {}'.format(_class)) | |||

| def configured_sub_zones(self, zone_name): | |||

| # Reversed pieces of the zone name | |||

| pieces = zone_name[:-1].split('.')[::-1] | |||

| # Point where at the root of the tree | |||

| where = self.zone_tree | |||

| # Until we've hit the bottom of this zone | |||

| try: | |||

| while pieces: | |||

| # Point where at the value of our current piece | |||

| where = where[pieces.pop(0)] | |||

| except KeyError: | |||

| self.log.debug('configured_sub_zones: unknown zone, %s, no subs', | |||

| zone_name) | |||

| return set() | |||

| # We're now pointed at the dict for our name, the keys of which will be | |||

| # any subzones | |||

| sub_zone_names = where.keys() | |||

| self.log.debug('configured_sub_zones: subs=%s', sub_zone_names) | |||

| return set(sub_zone_names) | |||

| def sync(self, eligible_zones=[], eligible_targets=[], dry_run=True, | |||

| force=False): | |||

| self.log.info('sync: eligible_zones=%s, eligible_targets=%s, ' | |||

| 'dry_run=%s, force=%s', eligible_zones, eligible_targets, | |||

| dry_run, force) | |||

| zones = self.config['zones'].items() | |||

| if eligible_zones: | |||

| zones = filter(lambda d: d[0] in eligible_zones, zones) | |||

| plans = [] | |||

| for zone_name, config in zones: | |||

| self.log.info('sync: zone=%s', zone_name) | |||

| try: | |||

| sources = config['sources'] | |||

| except KeyError: | |||

| raise Exception('Zone {} is missing sources'.format(zone_name)) | |||

| try: | |||

| targets = config['targets'] | |||

| except KeyError: | |||

| raise Exception('Zone {} is missing targets'.format(zone_name)) | |||

| if eligible_targets: | |||

| targets = filter(lambda d: d in eligible_targets, targets) | |||

| self.log.info('sync: sources=%s -> targets=%s', sources, targets) | |||

| try: | |||

| sources = [self.providers[source] for source in sources] | |||

| except KeyError: | |||

| raise Exception('Zone {}, unknown source: {}'.format(zone_name, | |||

| source)) | |||

| try: | |||

| trgs = [] | |||

| for target in targets: | |||

| trg = self.providers[target] | |||

| if not isinstance(trg, BaseProvider): | |||

| raise Exception('{} - "{}" does not support targeting' | |||

| .format(trg, target)) | |||

| trgs.append(trg) | |||

| targets = trgs | |||

| except KeyError: | |||

| raise Exception('Zone {}, unknown target: {}'.format(zone_name, | |||

| target)) | |||

| self.log.debug('sync: populating') | |||

| zone = Zone(zone_name, | |||

| sub_zones=self.configured_sub_zones(zone_name)) | |||

| for source in sources: | |||

| source.populate(zone) | |||

| self.log.debug('sync: planning') | |||

| for target in targets: | |||

| plan = target.plan(zone) | |||

| if plan: | |||

| plans.append((target, plan)) | |||

| hr = '*************************************************************' \ | |||

| '*******************\n' | |||

| buf = StringIO() | |||

| buf.write('\n') | |||

| if plans: | |||

| current_zone = None | |||

| for target, plan in plans: | |||

| if plan.desired.name != current_zone: | |||

| current_zone = plan.desired.name | |||

| buf.write(hr) | |||

| buf.write('* ') | |||

| buf.write(current_zone) | |||

| buf.write('\n') | |||

| buf.write(hr) | |||

| buf.write('* ') | |||

| buf.write(target.id) | |||

| buf.write(' (') | |||

| buf.write(target) | |||

| buf.write(')\n* ') | |||

| for change in plan.changes: | |||

| buf.write(change.__repr__(leader='* ')) | |||

| buf.write('\n* ') | |||

| buf.write('Summary: ') | |||

| buf.write(plan) | |||

| buf.write('\n') | |||

| else: | |||

| buf.write(hr) | |||

| buf.write('No changes were planned\n') | |||

| buf.write(hr) | |||

| buf.write('\n') | |||

| self.log.info(buf.getvalue()) | |||

| if not force: | |||

| self.log.debug('sync: checking safety') | |||

| for target, plan in plans: | |||

| plan.raise_if_unsafe() | |||

| if dry_run or config.get('always-dry-run', False): | |||

| return 0 | |||

| total_changes = 0 | |||

| self.log.debug('sync: applying') | |||

| for target, plan in plans: | |||

| total_changes += target.apply(plan) | |||

| self.log.info('sync: %d total changes', total_changes) | |||

| return total_changes | |||

| def compare(self, a, b, zone): | |||

| ''' | |||

| Compare zone data between 2 sources. | |||

| Note: only things supported by both sources will be considered | |||

| ''' | |||

| self.log.info('compare: a=%s, b=%s, zone=%s', a, b, zone) | |||

| try: | |||

| a = [self.providers[source] for source in a] | |||

| b = [self.providers[source] for source in b] | |||

| except KeyError as e: | |||

| raise Exception('Unknown source: {}'.format(e.args[0])) | |||

| sub_zones = self.configured_sub_zones(zone) | |||

| za = Zone(zone, sub_zones) | |||

| for source in a: | |||

| source.populate(za) | |||

| zb = Zone(zone, sub_zones) | |||

| for source in b: | |||

| source.populate(zb) | |||

| return zb.changes(za, _AggregateTarget(a + b)) | |||

| def dump(self, zone, output_dir, source, *sources): | |||

| ''' | |||

| Dump zone data from the specified source | |||

| ''' | |||

| self.log.info('dump: zone=%s, sources=%s', zone, sources) | |||

| # We broke out source to force at least one to be passed, add it to any | |||

| # others we got. | |||

| sources = [source] + list(sources) | |||

| try: | |||

| sources = [self.providers[s] for s in sources] | |||

| except KeyError as e: | |||

| raise Exception('Unknown source: {}'.format(e.args[0])) | |||

| target = YamlProvider('dump', output_dir) | |||

| zone = Zone(zone, self.configured_sub_zones(zone)) | |||

| for source in sources: | |||

| source.populate(zone) | |||

| plan = target.plan(zone) | |||

| target.apply(plan) | |||

| def validate_configs(self): | |||

| for zone_name, config in self.config['zones'].items(): | |||

| zone = Zone(zone_name, self.configured_sub_zones(zone_name)) | |||

| try: | |||

| sources = config['sources'] | |||

| except KeyError: | |||

| raise Exception('Zone {} is missing sources'.format(zone_name)) | |||

| try: | |||

| sources = [self.providers[source] for source in sources] | |||

| except KeyError: | |||

| raise Exception('Zone {}, unknown source: {}'.format(zone_name, | |||

| source)) | |||

| for source in sources: | |||

| if isinstance(source, YamlProvider): | |||

| source.populate(zone) | |||
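The zone tree built in `Manager.__init__` and walked in `configured_sub_zones` is a nested dict keyed by DNS labels, read from the TLD downwards. The following is a standalone sketch of that structure, written as free functions rather than methods; the function signatures are assumptions made for illustration, and `dict.setdefault` replaces the original try/except.

```python
def build_zone_tree(zone_names):
    """Build a nested dict of labels, e.g. {'tests': {'unit': {'sub': {}}}}."""
    tree = {}
    # Sorting by reversed string mirrors the original; the setdefault-based
    # build below does not actually depend on the order.
    for name in sorted(zone_names, key=lambda s: s[::-1]):
        where = tree
        # Drop the trailing dot and walk the labels from the TLD downwards
        for piece in name[:-1].split('.')[::-1]:
            where = where.setdefault(piece, {})
    return tree


def configured_sub_zones(tree, zone_name):
    """Return the labels of zones configured directly beneath zone_name."""
    where = tree
    for piece in zone_name[:-1].split('.')[::-1]:
        if piece not in where:
            # Unknown zone, so it has no configured sub-zones
            return set()
        where = where[piece]
    return set(where.keys())


if __name__ == '__main__':
    tree = build_zone_tree(['unit.tests.', 'sub.unit.tests.'])
    print(tree)
    print(configured_sub_zones(tree, 'unit.tests.'))
```

Only the next label down is returned, which is what `Zone` needs in order to know which names are delegated to a separately managed sub-zone.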

+ 6

- 0

octodns/provider/__init__.py

| @ -0,0 +1,6 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

+ 116

- 0

octodns/provider/base.py

| @ -0,0 +1,116 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from ..source.base import BaseSource | |||

| from ..zone import Zone | |||

| class UnsafePlan(Exception): | |||

| pass | |||

| class Plan(object): | |||

| MAX_SAFE_UPDATES = 4 | |||

| MAX_SAFE_DELETES = 4 | |||

| def __init__(self, existing, desired, changes): | |||

| self.existing = existing | |||

| self.desired = desired | |||

| self.changes = changes | |||

| change_counts = { | |||

| 'Create': 0, | |||

| 'Delete': 0, | |||

| 'Update': 0 | |||

| } | |||

| for change in changes: | |||

| change_counts[change.__class__.__name__] += 1 | |||

| self.change_counts = change_counts | |||

| def raise_if_unsafe(self): | |||

| # TODO: what is safe really? | |||

| if self.change_counts['Update'] > self.MAX_SAFE_UPDATES: | |||

| raise UnsafePlan('Too many updates') | |||

| if self.change_counts['Delete'] > self.MAX_SAFE_DELETES: | |||

| raise UnsafePlan('Too many deletes') | |||

| def __repr__(self): | |||

| return 'Creates={}, Updates={}, Deletes={}, Existing Records={}' \ | |||

| .format(self.change_counts['Create'], self.change_counts['Update'], | |||

| self.change_counts['Delete'], | |||

| len(self.existing.records)) | |||

| class BaseProvider(BaseSource): | |||

| def __init__(self, id, apply_disabled=False): | |||

| super(BaseProvider, self).__init__(id) | |||

| self.log.debug('__init__: id=%s, apply_disabled=%s', id, | |||

| apply_disabled) | |||

| self.apply_disabled = apply_disabled | |||

| def _include_change(self, change): | |||

| ''' | |||

| An opportunity for providers to filter out false positives due to | |||

| peculiarities in their implementation. E.g. minimum TTLs. | |||

| ''' | |||

| return True | |||

| def _extra_changes(self, existing, changes): | |||

| ''' | |||

| An opportunity for providers to add extra changes to the plan that are | |||

| necessary to update ancillary record data or configure the zone. E.g. | |||

| base NS records. | |||

| ''' | |||

| return [] | |||

| def plan(self, desired): | |||

| self.log.info('plan: desired=%s', desired.name) | |||

| existing = Zone(desired.name, desired.sub_zones) | |||

| self.populate(existing, target=True) | |||

| # compute the changes at the zone/record level | |||

| changes = existing.changes(desired, self) | |||

| # allow the provider to filter out false positives | |||

| before = len(changes) | |||

| changes = filter(self._include_change, changes) | |||

| after = len(changes) | |||

| if before != after: | |||

| self.log.info('plan: filtered out %s changes', before - after) | |||

| # allow the provider to add extra changes it needs | |||

| extra = self._extra_changes(existing, changes) | |||

| if extra: | |||

| self.log.info('plan: extra changes\n %s', '\n ' | |||

| .join([str(c) for c in extra])) | |||

| changes += extra | |||

| if changes: | |||

| plan = Plan(existing, desired, changes) | |||

| self.log.info('plan: %s', plan) | |||

| return plan | |||

| self.log.info('plan: No changes') | |||

| return None | |||

| def apply(self, plan): | |||

| ''' | |||

| Submits actual planned changes to the provider. Returns the number of | |||

| changes made | |||

| ''' | |||

| if self.apply_disabled: | |||

| self.log.info('apply: disabled') | |||

| return 0 | |||

| self.log.info('apply: making changes') | |||

| self._apply(plan) | |||

| return len(plan.changes) | |||

| def _apply(self, plan): | |||

| raise NotImplementedError('Abstract base class, _apply method ' | |||

| 'missing') | |||
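`Plan` counts changes by class name and refuses to proceed, unless forced, when there are more than four updates or more than four deletes; creates are never limited. A minimal sketch of that check, assuming changes are represented simply by their kind names as strings (an illustrative simplification; the real code inspects `change.__class__.__name__`):

```python
from collections import Counter


class UnsafePlan(Exception):
    pass


# Thresholds copied from the Plan class in this commit
MAX_SAFE_UPDATES = 4
MAX_SAFE_DELETES = 4


def raise_if_unsafe(change_kinds):
    """Count changes per kind and raise when a threshold is exceeded.

    `change_kinds` is an iterable of 'Create', 'Update' or 'Delete'
    strings (a hypothetical input shape for this sketch).
    """
    counts = Counter(change_kinds)
    if counts['Update'] > MAX_SAFE_UPDATES:
        raise UnsafePlan('Too many updates')
    if counts['Delete'] > MAX_SAFE_DELETES:
        raise UnsafePlan('Too many deletes')
    return counts


if __name__ == '__main__':
    print(raise_if_unsafe(['Create'] * 10 + ['Update'] * 4))
```

The limits are absolute counts rather than a fraction of the existing records, so a large zone hits them as quickly as a small one; the `TODO` in the source suggests the author regarded this as provisional.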

+ 249

- 0

octodns/provider/cloudflare.py

| @ -0,0 +1,249 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from collections import defaultdict | |||

| from logging import getLogger | |||

| from requests import Session | |||

| from ..record import Record, Update | |||

| from .base import BaseProvider | |||

| class CloudflareAuthenticationError(Exception): | |||

| def __init__(self, data): | |||

| try: | |||

| message = data['errors'][0]['message'] | |||

| except (IndexError, KeyError): | |||

| message = 'Authentication error' | |||

| super(CloudflareAuthenticationError, self).__init__(message) | |||

| class CloudflareProvider(BaseProvider): | |||

| SUPPORTS_GEO = False | |||

| # TODO: support SRV | |||

| UNSUPPORTED_TYPES = ('NAPTR', 'PTR', 'SOA', 'SRV', 'SSHFP') | |||

| MIN_TTL = 120 | |||

| TIMEOUT = 15 | |||

| def __init__(self, id, email, token, *args, **kwargs): | |||

| self.log = getLogger('CloudflareProvider[{}]'.format(id)) | |||

| self.log.debug('__init__: id=%s, email=%s, token=***', id, email) | |||

| super(CloudflareProvider, self).__init__(id, *args, **kwargs) | |||

| sess = Session() | |||

| sess.headers.update({ | |||

| 'X-Auth-Email': email, | |||

| 'X-Auth-Key': token, | |||

| }) | |||

| self._sess = sess | |||

| self._zones = None | |||

| self._zone_records = {} | |||

| def supports(self, record): | |||

| return record._type not in self.UNSUPPORTED_TYPES | |||

| def _request(self, method, path, params=None, data=None): | |||

| self.log.debug('_request: method=%s, path=%s', method, path) | |||

| url = 'https://api.cloudflare.com/client/v4{}'.format(path) | |||

| resp = self._sess.request(method, url, params=params, json=data, | |||

| timeout=self.TIMEOUT) | |||

| self.log.debug('_request: status=%d', resp.status_code) | |||

| if resp.status_code == 403: | |||

| raise CloudflareAuthenticationError(resp.json()) | |||

| resp.raise_for_status() | |||

| return resp.json() | |||

| @property | |||

| def zones(self): | |||

| if self._zones is None: | |||

| page = 1 | |||

| zones = [] | |||

| while page: | |||

| resp = self._request('GET', '/zones', params={'page': page}) | |||

| zones += resp['result'] | |||

| info = resp['result_info'] | |||

| if info['count'] > 0 and info['count'] == info['per_page']: | |||

| page += 1 | |||

| else: | |||

| page = None | |||

| self._zones = {'{}.'.format(z['name']): z['id'] for z in zones} | |||

| return self._zones | |||

| def _data_for_multiple(self, _type, records): | |||

| return { | |||

| 'ttl': records[0]['ttl'], | |||

| 'type': _type, | |||

| 'values': [r['content'] for r in records], | |||

| } | |||

| _data_for_A = _data_for_multiple | |||

| _data_for_AAAA = _data_for_multiple | |||

| _data_for_SPF = _data_for_multiple | |||

| _data_for_TXT = _data_for_multiple | |||

| def _data_for_CNAME(self, _type, records): | |||

| only = records[0] | |||

| return { | |||

| 'ttl': only['ttl'], | |||

| 'type': _type, | |||

| 'value': '{}.'.format(only['content']) | |||

| } | |||

| def _data_for_MX(self, _type, records): | |||

| values = [] | |||

| for r in records: | |||

| values.append({ | |||

| 'priority': r['priority'], | |||

| 'value': '{}.'.format(r['content']), | |||

| }) | |||

| return { | |||

| 'ttl': records[0]['ttl'], | |||

| 'type': _type, | |||

| 'values': values, | |||

| } | |||

| def _data_for_NS(self, _type, records): | |||

| return { | |||

| 'ttl': records[0]['ttl'], | |||

| 'type': _type, | |||

| 'values': ['{}.'.format(r['content']) for r in records], | |||

| } | |||

| def zone_records(self, zone): | |||

| if zone.name not in self._zone_records: | |||

| zone_id = self.zones.get(zone.name, False) | |||

| if not zone_id: | |||

| return [] | |||

| records = [] | |||

| path = '/zones/{}/dns_records'.format(zone_id) | |||

| page = 1 | |||

| while page: | |||

| resp = self._request('GET', path, params={'page': page}) | |||

| records += resp['result'] | |||

| info = resp['result_info'] | |||

| if info['count'] > 0 and info['count'] == info['per_page']: | |||

| page += 1 | |||

| else: | |||

| page = None | |||

| self._zone_records[zone.name] = records | |||

| return self._zone_records[zone.name] | |||

| def populate(self, zone, target=False): | |||

| self.log.debug('populate: name=%s', zone.name) | |||

| before = len(zone.records) | |||

| records = self.zone_records(zone) | |||

| if records: | |||

| values = defaultdict(lambda: defaultdict(list)) | |||

| for record in records: | |||

| name = zone.hostname_from_fqdn(record['name']) | |||

| _type = record['type'] | |||

| if _type not in self.UNSUPPORTED_TYPES: | |||

| values[name][record['type']].append(record) | |||

| for name, types in values.items(): | |||

| for _type, records in types.items(): | |||

| data_for = getattr(self, '_data_for_{}'.format(_type)) | |||

| data = data_for(_type, records) | |||

| record = Record.new(zone, name, data, source=self) | |||

| zone.add_record(record) | |||

| self.log.info('populate: found %s records', | |||

| len(zone.records) - before) | |||

| def _include_change(self, change): | |||

| if isinstance(change, Update): | |||

| existing = change.existing.data | |||

| new = change.new.data | |||

| new['ttl'] = max(self.MIN_TTL, new['ttl']) | |||

| if new == existing: | |||

| return False | |||

| return True | |||

| def _contents_for_multiple(self, record): | |||

| for value in record.values: | |||

| yield {'content': value} | |||

| _contents_for_A = _contents_for_multiple | |||

| _contents_for_AAAA = _contents_for_multiple | |||

| _contents_for_NS = _contents_for_multiple | |||

| _contents_for_SPF = _contents_for_multiple | |||

| _contents_for_TXT = _contents_for_multiple | |||

| def _contents_for_CNAME(self, record): | |||

| yield {'content': record.value} | |||

| def _contents_for_MX(self, record): | |||

| for value in record.values: | |||

| yield { | |||

| 'priority': value.priority, | |||

| 'content': value.value | |||

| } | |||

| def _apply_Create(self, change): | |||

| new = change.new | |||

| zone_id = self.zones[new.zone.name] | |||

| contents_for = getattr(self, '_contents_for_{}'.format(new._type)) | |||

| path = '/zones/{}/dns_records'.format(zone_id) | |||

| name = new.fqdn[:-1] | |||

| for content in contents_for(change.new): | |||

| content.update({ | |||

| 'name': name, | |||

| 'type': new._type, | |||

| # Cloudflare has a min ttl of 120s | |||

| 'ttl': max(self.MIN_TTL, new.ttl), | |||

| }) | |||

| self._request('POST', path, data=content) | |||

| def _apply_Update(self, change): | |||

| # Create the new and delete the old | |||

| self._apply_Create(change) | |||

| self._apply_Delete(change) | |||

| def _apply_Delete(self, change): | |||

| existing = change.existing | |||

| existing_name = existing.fqdn[:-1] | |||

| for record in self.zone_records(existing.zone): | |||

| if existing_name == record['name'] and \ | |||

| existing._type == record['type']: | |||

| path = '/zones/{}/dns_records/{}'.format(record['zone_id'], | |||

| record['id']) | |||

| self._request('DELETE', path) | |||

| def _apply(self, plan): | |||

| desired = plan.desired | |||

| changes = plan.changes | |||

| self.log.debug('_apply: zone=%s, len(changes)=%d', desired.name, | |||

| len(changes)) | |||

| name = desired.name | |||

| if name not in self.zones: | |||

| self.log.debug('_apply: no matching zone, creating') | |||

| data = { | |||

| 'name': name[:-1], | |||

| 'jump_start': False, | |||

| } | |||

| resp = self._request('POST', '/zones', data=data) | |||

| zone_id = resp['result']['id'] | |||

| self.zones[name] = zone_id | |||

| self._zone_records[name] = {} | |||

| for change in changes: | |||

| class_name = change.__class__.__name__ | |||

| getattr(self, '_apply_{}'.format(class_name))(change) | |||

| # clear the cache | |||

| self._zone_records.pop(name, None) | |||
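Both `zones` and `zone_records` in the Cloudflare provider page through results with the same loop: keep requesting the next page while the page just received was full. The sketch below isolates that loop behind a callable so it can run without network access; `fetch_all` and `fake_fetch_page` are hypothetical names, and the response shape (`result`, `result_info.count`, `result_info.per_page`) is taken only from how this commit reads it.

```python
def fetch_all(fetch_page):
    """Collect results from every page returned by fetch_page(page)."""
    results = []
    page = 1
    while page:
        resp = fetch_page(page)
        results += resp['result']
        info = resp['result_info']
        # A full, non-empty page suggests there may be more to fetch
        if info['count'] > 0 and info['count'] == info['per_page']:
            page += 1
        else:
            page = None
    return results


def fake_fetch_page(page, items=tuple(range(5)), per_page=2):
    """In-memory stand-in for the HTTP request, for illustration only."""
    start = (page - 1) * per_page
    chunk = list(items[start:start + per_page])
    return {
        'result': chunk,
        'result_info': {'count': len(chunk), 'per_page': per_page},
    }


if __name__ == '__main__':
    print(fetch_all(fake_fetch_page))
```

When the total is an exact multiple of the page size, this stopping rule issues one additional request that returns an empty page before the loop ends.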

+ 349

- 0

octodns/provider/dnsimple.py

| @ -0,0 +1,349 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from collections import defaultdict | |||

| from requests import Session | |||

| import logging | |||

| from ..record import Record | |||

| from .base import BaseProvider | |||

| class DnsimpleClientException(Exception): | |||

| pass | |||

| class DnsimpleClientNotFound(DnsimpleClientException): | |||

| def __init__(self): | |||

| super(DnsimpleClientNotFound, self).__init__('Not found') | |||

| class DnsimpleClientUnauthorized(DnsimpleClientException): | |||

| def __init__(self): | |||

| super(DnsimpleClientUnauthorized, self).__init__('Unauthorized') | |||

| class DnsimpleClient(object): | |||

| BASE = 'https://api.dnsimple.com/v2/' | |||

| def __init__(self, token, account): | |||

| self.account = account | |||

| sess = Session() | |||

| sess.headers.update({'Authorization': 'Bearer {}'.format(token)}) | |||

| self._sess = sess | |||

| def _request(self, method, path, params=None, data=None): | |||

| url = '{}{}{}'.format(self.BASE, self.account, path) | |||

| resp = self._sess.request(method, url, params=params, json=data) | |||

| if resp.status_code == 401: | |||

| raise DnsimpleClientUnauthorized() | |||

| if resp.status_code == 404: | |||

| raise DnsimpleClientNotFound() | |||

| resp.raise_for_status() | |||

| return resp | |||

| def domain(self, name): | |||

| path = '/domains/{}'.format(name) | |||

| return self._request('GET', path).json() | |||

| def domain_create(self, name): | |||

| return self._request('POST', '/domains', data={'name': name}) | |||

| def records(self, zone_name): | |||

| ret = [] | |||

| page = 1 | |||

| while True: | |||

| data = self._request('GET', '/zones/{}/records'.format(zone_name), | |||

| {'page': page}).json() | |||

| ret += data['data'] | |||

| pagination = data['pagination'] | |||

| if page >= pagination['total_pages']: | |||

| break | |||

| page += 1 | |||

| return ret | |||

| def record_create(self, zone_name, params): | |||

| path = '/zones/{}/records'.format(zone_name) | |||

| self._request('POST', path, data=params) | |||

| def record_delete(self, zone_name, record_id): | |||

| path = '/zones/{}/records/{}'.format(zone_name, record_id) | |||

| self._request('DELETE', path) | |||

| class DnsimpleProvider(BaseProvider): | |||

| SUPPORTS_GEO = False | |||

| def __init__(self, id, token, account, *args, **kwargs): | |||

| self.log = logging.getLogger('DnsimpleProvider[{}]'.format(id)) | |||

| self.log.debug('__init__: id=%s, token=***, account=%s', id, account) | |||

| super(DnsimpleProvider, self).__init__(id, *args, **kwargs) | |||

| self._client = DnsimpleClient(token, account) | |||

| self._zone_records = {} | |||

| def _data_for_multiple(self, _type, records): | |||

| return { | |||

| 'ttl': records[0]['ttl'], | |||

| 'type': _type, | |||

| 'values': [r['content'] for r in records] | |||

| } | |||

| _data_for_A = _data_for_multiple | |||

| _data_for_AAAA = _data_for_multiple | |||

| _data_for_SPF = _data_for_multiple | |||

| _data_for_TXT = _data_for_multiple | |||

| def _data_for_CNAME(self, _type, records): | |||

| record = records[0] | |||

| return { | |||

| 'ttl': record['ttl'], | |||

| 'type': _type, | |||

| 'value': '{}.'.format(record['content']) | |||

| } | |||

| def _data_for_MX(self, _type, records): | |||

| values = [] | |||

| for record in records: | |||

| values.append({ | |||

| 'priority': record['priority'], | |||

| 'value': '{}.'.format(record['content']) | |||

| }) | |||

| return { | |||

| 'ttl': records[0]['ttl'], | |||

| 'type': _type, | |||

| 'values': values | |||

| } | |||

| def _data_for_NAPTR(self, _type, records): | |||

| values = [] | |||

| for record in records: | |||

| try: | |||

| order, preference, flags, service, regexp, replacement = \ | |||

| record['content'].split(' ', 5) | |||

| except ValueError: | |||

| # their api will let you create invalid records, this | |||

| # essentially handles that by ignoring them for values | |||

| # purposes. That will cause updates to happen to delete them if | |||

| # they shouldn't exist or update them if they're wrong | |||

| continue | |||

| values.append({ | |||

| 'flags': flags[1:-1], | |||

| 'order': order, | |||

| 'preference': preference, | |||

| 'regexp': regexp[1:-1], | |||

| 'replacement': replacement, | |||

| 'service': service[1:-1], | |||

| }) | |||

| return { | |||

| 'type': _type, | |||

| 'ttl': records[0]['ttl'], | |||

| 'values': values | |||

| } | |||

| def _data_for_NS(self, _type, records): | |||

| values = [] | |||

| for record in records: | |||

| content = record['content'] | |||

| if content[-1] != '.': | |||

| content = '{}.'.format(content) | |||

| values.append(content) | |||

| return { | |||

| 'ttl': records[0]['ttl'], | |||

| 'type': _type, | |||

| 'values': values, | |||

| } | |||

| def _data_for_PTR(self, _type, records): | |||

| record = records[0] | |||

| return { | |||

| 'ttl': record['ttl'], | |||

| 'type': _type, | |||

| 'value': record['content'] | |||

| } | |||

| def _data_for_SRV(self, _type, records): | |||

| values = [] | |||

| for record in records: | |||

| try: | |||

| weight, port, target = record['content'].split(' ', 2) | |||

| except ValueError: | |||

| # see _data_for_NAPTR's continue | |||

| continue | |||

| values.append({ | |||

| 'port': port, | |||

| 'priority': record['priority'], | |||

| 'target': '{}.'.format(target), | |||

| 'weight': weight | |||

| }) | |||

| return { | |||

| 'type': _type, | |||

| 'ttl': records[0]['ttl'], | |||

| 'values': values | |||

| } | |||

| def _data_for_SSHFP(self, _type, records): | |||

| values = [] | |||

| for record in records: | |||

| try: | |||

| algorithm, fingerprint_type, fingerprint = \ | |||

| record['content'].split(' ', 2) | |||

| except ValueError: | |||

| # see _data_for_NAPTR's continue | |||

| continue | |||

| values.append({ | |||

| 'algorithm': algorithm, | |||

| 'fingerprint': fingerprint, | |||

| 'fingerprint_type': fingerprint_type | |||

| }) | |||

| return { | |||

| 'type': _type, | |||

| 'ttl': records[0]['ttl'], | |||

| 'values': values | |||

| } | |||

| def zone_records(self, zone): | |||

| if zone.name not in self._zone_records: | |||

| try: | |||

| self._zone_records[zone.name] = \ | |||

| self._client.records(zone.name[:-1]) | |||

| except DnsimpleClientNotFound: | |||

| return [] | |||

| return self._zone_records[zone.name] | |||

| def populate(self, zone, target=False): | |||

| self.log.debug('populate: name=%s', zone.name) | |||

| values = defaultdict(lambda: defaultdict(list)) | |||

| for record in self.zone_records(zone): | |||

| _type = record['type'] | |||

| if _type == 'SOA': | |||

| continue | |||

| values[record['name']][record['type']].append(record) | |||

| before = len(zone.records) | |||

| for name, types in values.items(): | |||

| for _type, records in types.items(): | |||

| data_for = getattr(self, '_data_for_{}'.format(_type)) | |||

| record = Record.new(zone, name, data_for(_type, records)) | |||

| zone.add_record(record) | |||

| self.log.info('populate: found %s records', | |||

| len(zone.records) - before) | |||

| def _params_for_multiple(self, record): | |||

| for value in record.values: | |||

| yield { | |||

| 'content': value, | |||

| 'name': record.name, | |||

| 'ttl': record.ttl, | |||

| 'type': record._type, | |||

| } | |||

| _params_for_A = _params_for_multiple | |||

| _params_for_AAAA = _params_for_multiple | |||

| _params_for_NS = _params_for_multiple | |||

| _params_for_SPF = _params_for_multiple | |||

| _params_for_TXT = _params_for_multiple | |||

| def _params_for_single(self, record): | |||

| yield { | |||

| 'content': record.value, | |||

| 'name': record.name, | |||

| 'ttl': record.ttl, | |||

| 'type': record._type | |||

| } | |||

| _params_for_CNAME = _params_for_single | |||

| _params_for_PTR = _params_for_single | |||

| def _params_for_MX(self, record): | |||

| for value in record.values: | |||

| yield { | |||

| 'content': value.value, | |||

| 'name': record.name, | |||

| 'priority': value.priority, | |||

| 'ttl': record.ttl, | |||

| 'type': record._type | |||

| } | |||

| def _params_for_NAPTR(self, record): | |||

| for value in record.values: | |||

| content = '{} {} "{}" "{}" "{}" {}' \ | |||

| .format(value.order, value.preference, value.flags, | |||

| value.service, value.regexp, value.replacement) | |||

| yield { | |||

| 'content': content, | |||

| 'name': record.name, | |||

| 'ttl': record.ttl, | |||

| 'type': record._type | |||

| } | |||

| def _params_for_SRV(self, record): | |||

| for value in record.values: | |||

| yield { | |||

| 'content': '{} {} {}'.format(value.weight, value.port, | |||

| value.target), | |||

| 'name': record.name, | |||

| 'priority': value.priority, | |||

| 'ttl': record.ttl, | |||

| 'type': record._type | |||

| } | |||

| def _params_for_SSHFP(self, record): | |||

| for value in record.values: | |||

| yield { | |||

| 'content': '{} {} {}'.format(value.algorithm, | |||

| value.fingerprint_type, | |||

| value.fingerprint), | |||

| 'name': record.name, | |||

| 'ttl': record.ttl, | |||

| 'type': record._type | |||

| } | |||

| def _apply_Create(self, change): | |||

| new = change.new | |||

| params_for = getattr(self, '_params_for_{}'.format(new._type)) | |||

| for params in params_for(new): | |||

| self._client.record_create(new.zone.name[:-1], params) | |||

| def _apply_Update(self, change): | |||

| self._apply_Create(change) | |||

| self._apply_Delete(change) | |||

| def _apply_Delete(self, change): | |||

| existing = change.existing | |||

| zone = existing.zone | |||

| for record in self.zone_records(zone): | |||

| if existing.name == record['name'] and \ | |||

| existing._type == record['type']: | |||

| self._client.record_delete(zone.name[:-1], record['id']) | |||

| def _apply(self, plan): | |||

| desired = plan.desired | |||

| changes = plan.changes | |||

| self.log.debug('_apply: zone=%s, len(changes)=%d', desired.name, | |||

| len(changes)) | |||

| domain_name = desired.name[:-1] | |||

| try: | |||

| self._client.domain(domain_name) | |||

| except DnsimpleClientNotFound: | |||

| self.log.debug('_apply: no matching zone, creating domain') | |||

| self._client.domain_create(domain_name) | |||

| for change in changes: | |||

| class_name = change.__class__.__name__ | |||

| getattr(self, '_apply_{}'.format(class_name))(change) | |||

| # Clear out the cache if any | |||

| self._zone_records.pop(desired.name, None) | |||
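Review note: `_apply` above routes each change to a handler chosen by the change's class name (`_apply_Create`, `_apply_Update`, `_apply_Delete`). A minimal standalone sketch of that name-based dispatch follows. `SketchProvider`, the stub `Create`/`Delete` classes, and the `calls` list are hypothetical stand-ins for illustration, not part of OctoDNS.

```python
class Create(object):
    # Hypothetical stand-in for a "create" change; carries the new record.
    def __init__(self, new):
        self.new = new


class Delete(object):
    # Hypothetical stand-in for a "delete" change; carries the old record.
    def __init__(self, existing):
        self.existing = existing


class SketchProvider(object):
    # Illustrative only: records which handler ran instead of calling an API.
    def __init__(self):
        self.calls = []

    def _apply_Create(self, change):
        self.calls.append(('create', change.new))

    def _apply_Delete(self, change):
        self.calls.append(('delete', change.existing))

    def apply(self, changes):
        for change in changes:
            # Look up the handler by the change's class name, as _apply does.
            class_name = change.__class__.__name__
            getattr(self, '_apply_{}'.format(class_name))(change)
        return self.calls


provider = SketchProvider()
calls = provider.apply([Create('www'), Delete('old')])
```

With this pattern, a change class that has no matching `_apply_<Name>` method raises `AttributeError` at dispatch time, so each new change type needs its own handler.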

+ 651

- 0

octodns/provider/dyn.py

| @ -0,0 +1,651 @@ | |||

| # | |||

| # | |||

| # | |||

| from __future__ import absolute_import, division, print_function, \ | |||

| unicode_literals | |||

| from collections import defaultdict | |||

| from dyn.tm.errors import DynectGetError | |||

| from dyn.tm.services.dsf import DSFARecord, DSFAAAARecord, DSFFailoverChain, \ | |||

| DSFMonitor, DSFNode, DSFRecordSet, DSFResponsePool, DSFRuleset, \ | |||

| TrafficDirector, get_all_dsf_monitors, get_all_dsf_services, \ | |||

| get_response_pool | |||

| from dyn.tm.session import DynectSession | |||

| from dyn.tm.zones import Zone as DynZone | |||

| from logging import getLogger | |||

| from uuid import uuid4 | |||

| from ..record import Record | |||

| from .base import BaseProvider | |||

| class _CachingDynZone(DynZone): | |||

| log = getLogger('_CachingDynZone') | |||

| _cache = {} | |||

| @classmethod | |||

| def get(cls, zone_name, create=False): | |||

| cls.log.debug('get: zone_name=%s, create=%s', zone_name, create) | |||

| # This works in dyn zone names, without the trailing . | |||

| try: | |||

| dyn_zone = cls._cache[zone_name] | |||

| cls.log.debug('get: cache hit') | |||

| except KeyError: | |||

| cls.log.debug('get: cache miss') | |||

| try: | |||

| dyn_zone = _CachingDynZone(zone_name) | |||

| cls.log.debug('get: fetched') | |||

| except DynectGetError: | |||

| if not create: | |||

| cls.log.debug("get: doesn't exist") | |||

| return None | |||

| # this value shouldn't really matter, it's not tied to | |||

| # whois or anything | |||

| hostname = 'hostmaster@{}'.format(zone_name[:-1]) | |||

| # Try again with the params necessary to create | |||

| dyn_zone = _CachingDynZone(zone_name, ttl=3600, | |||

| contact=hostname, | |||

| serial_style='increment') | |||

| cls.log.debug('get: created') | |||

| cls._cache[zone_name] = dyn_zone | |||

| return dyn_zone | |||

| @classmethod | |||

| def flush_zone(cls, zone_name): | |||

| '''Flushes the zone cache, if there is one''' | |||

| cls.log.debug('flush_zone: zone_name=%s', zone_name) | |||

| try: | |||

| del cls._cache[zone_name] | |||

| except KeyError: | |||

| pass | |||

| def __init__(self, zone_name, *args, **kwargs): | |||

| super(_CachingDynZone, self).__init__(zone_name, *args, **kwargs) | |||

| self.flush_cache() | |||

| def flush_cache(self): | |||

| self._cached_records = None | |||

| def get_all_records(self): | |||

| if self._cached_records is None: | |||

| self._cached_records = \ | |||

| super(_CachingDynZone, self).get_all_records() | |||

| return self._cached_records | |||

| def publish(self): | |||

| super(_CachingDynZone, self).publish() | |||

| self.flush_cache() | |||

| class DynProvider(BaseProvider): | |||

| RECORDS_TO_TYPE = { | |||

| 'a_records': 'A', | |||

| 'aaaa_records': 'AAAA', | |||

| 'cname_records': 'CNAME', | |||

| 'mx_records': 'MX', | |||

| 'naptr_records': 'NAPTR', | |||

| 'ns_records': 'NS', | |||

| 'ptr_records': 'PTR', | |||

| 'sshfp_records': 'SSHFP', | |||

| 'spf_records': 'SPF', | |||

| 'srv_records': 'SRV', | |||

| 'txt_records': 'TXT', | |||

| } | |||

| TYPE_TO_RECORDS = { | |||

| 'A': 'a_records', | |||

| 'AAAA': 'aaaa_records', | |||

| 'CNAME': 'cname_records', | |||

| 'MX': 'mx_records', | |||

| 'NAPTR': 'naptr_records', | |||

| 'NS': 'ns_records', | |||

| 'PTR': 'ptr_records', | |||

| 'SSHFP': 'sshfp_records', | |||

| 'SPF': 'spf_records', | |||

| 'SRV': 'srv_records', | |||

| 'TXT': 'txt_records', | |||

| } | |||

| # https://help.dyn.com/predefined-geotm-regions-groups/ | |||

| REGION_CODES = { | |||

| 'NA': 11, # Continental North America | |||

| 'SA': 12, # Continental South America | |||

| 'EU': 13, # Continental Europe | |||

| 'AF': 14, # Continental Africa | |||

| 'AS': 15, # Continental Asia | |||

| 'OC': 16, # Continental Australia/Oceania | |||

| 'AN': 17, # Continental Antarctica | |||

| } | |||